Sunday, August 27, 2006

Make your password safe on the Internet

Seeking to stem the proliferation of phishing scams, researchers at Stanford University have developed a simple tool that prevents a stolen password from being used to access the authentic site.

Phishing is a form of criminal activity using social engineering techniques. Phishers attempt to fraudulently acquire sensitive information, such as passwords and credit card details, by masquerading as a trustworthy person or business in an electronic communication. More on Wikipedia...

"Most Internet users use the same password at many sites," says Dan Boneh, an associate professor of computer science at Stanford. "A phishing attack on one site will expose their passwords at many other sites."

The Anti-Phishing Working Group identified nearly 12,000 malicious phishing sites in May 2006, up from 3,300 sites just one year earlier.

The technique, called PwdHash (Password Hash), simply consists of typing "@@" at the beginning of your password to tell the software that you are entering a password. The software then does its job: it cryptographically combines your password with the site's domain name. The password actually sent to the website is therefore not the one you typed, and if a phishing site steals it, it won't work on the authentic site.
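To make the idea concrete, here is a minimal Python sketch of a domain-bound password. It assumes HMAC-SHA256 keyed with the typed password over the domain name, base64-encoded and truncated; the real PwdHash extension uses its own keyed hash and an encoding step that adapts to each site's password rules, which are not reproduced here. The function name `site_password` and the 12-character length are illustrative choices.

```python
import base64
import hashlib
import hmac

PREFIX = "@@"  # marker telling the software "this is a password" (from the article)


def site_password(typed: str, domain: str, length: int = 12) -> str:
    """Derive a per-site password from the typed password and the site's domain.

    Sketch only: HMAC-SHA256 plus base64 truncation is an assumption,
    not the exact PwdHash algorithm.
    """
    if not typed.startswith(PREFIX):
        return typed  # no marker: the password is sent unchanged
    secret = typed[len(PREFIX):]
    digest = hmac.new(secret.encode(), domain.lower().encode(), hashlib.sha256).digest()
    return base64.b64encode(digest).decode()[:length]


real = site_password("@@hunter2", "bank.example")
phished = site_password("@@hunter2", "bank-example.phish")
print(real == phished)  # False: the value captured by the fake site is useless on the real one
```

Because the domain is part of the input, a fake site at another address receives a value that does not work on the real site, while the user still only has to remember one password.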

Hashing passwords is not a new idea, but the novelty of the researchers' work is making it easy for end users (us) to apply. PwdHash is available as an add-on for Firefox and as a plug-in for Internet Explorer 6. The official website is here.

Source: ComputerWorld, last week.

Labels: security

Saturday, August 26, 2006

Internet2... it's back, and here's why

Researchers involved in the Moonv6 project, the world’s largest native IPv6 test, have demonstrated that the Network Time Protocol runs over IPv6, the long-anticipated upgrade to the Internet’s main protocol.

Here is an intro sentence that leaves you puzzled.

It's done on purpose.

Founded in 2002, Moonv6 is a joint operation of the University of New Hampshire, the U.S. Defense Department, the North American IPv6 Task Force and the Internet2 university consortium. Because yes, Internet2 is real: it is a non-profit consortium that develops and deploys advanced network applications and technologies, mostly for high-speed data transfer. Its purpose is to develop and implement applications and technologies running at up to 10 GB/s, such as IPv6, IP multicast and Quality of Service (QoS). The goal is not to create a separate network but to ensure that the new applications can work on the current Internet.

And IPv6? It stands for Internet Protocol version 6.

Because of the address shortage in the current version of IP (version 4), and also to solve some of the problems revealed by its large-scale use, the transition toward IPv6 started in 1995. Among the essential innovations are:

- the increase in available addresses from 2^32 to 2^128;

- automatic configuration and renumbering mechanisms;

- IPsec, QoS and multicast;

- simplified packet headers, which in particular make routing easier.

The Network Time Protocol (NTP) will probably interest you less. It is a protocol for synchronizing the clocks of computer systems over packet-switched, variable-latency data networks. NTP runs over UDP, on port 123, and is designed specifically to resist the effects of variable latency.
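At the heart of that resistance is a small calculation performed on every NTP exchange: the client timestamps its request, the server timestamps its reception and its reply, and the client timestamps the answer. The Python sketch below applies the standard offset and delay formulas from the NTP specification; the function name and the example timings (a client 5 s behind, 30 ms out, 50 ms back) are made up for illustration.

```python
def ntp_offset_delay(t0, t1, t2, t3):
    """Clock offset and round-trip delay from one NTP request/response exchange.

    t0: request sent (client clock)      t1: request received (server clock)
    t2: reply sent (server clock)        t3: reply received (client clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2   # how far the client clock lags the server's
    delay = (t3 - t0) - (t2 - t1)          # round trip, minus the server's processing time
    return offset, delay


# Client clock 5 s behind; 30 ms on the way out, 2 ms at the server, 50 ms on the way back.
offset, delay = ntp_offset_delay(100.000, 105.030, 105.032, 100.082)
print(round(offset, 3), round(delay, 3))   # 4.99 0.08
```

The 10 ms error is exactly half the difference between the outbound and return paths, which is why NTP gathers several samples and favors those with the lowest round-trip delay instead of trusting a single exchange.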

Back to the news: researchers set up a wide-area link between the University of New Hampshire and the military’s Joint Interoperability Test Center at Fort Huachuca, Ariz. to run NTP over both regular IP (known as IPv4) and the emerging IPv6.

"This is the first time anyone has demonstrated NTP over an IPv6 WAN," says Erica Williamsen, an IPv6 engineer at the UNH Interoperability Lab. "Both sites were able to synchronize time."

Voilà: we've learned quite a few things about Internet2.

Source: NetworkWorld, last week.

For those who do not know:

- As an example of what the high-speed Internet2 network can do, these researchers downloaded 860 GB in less than twenty minutes: the equivalent of 180 DVDs in a small van driving across Brussels.

- With IPv6, 2^128 fixed addresses will be available on the Internet. Will that be enough, or should we expect another shortage someday? Actually, it represents 667 million billion addresses per mm² of the Earth's surface.
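These figures are easy to check. The short Python sketch below assumes decimal units (1 GB = 10^9 bytes) and an Earth surface area of about 510 million km², land and oceans included; neither assumption comes from the article.

```python
ADDRESSES_IPV4 = 2 ** 32
ADDRESSES_IPV6 = 2 ** 128

# Assumption: Earth's surface (land and oceans) is about 510 million km^2.
# 1 km = 10^6 mm, so 1 km^2 = 10^12 mm^2.
EARTH_SURFACE_MM2 = 510e6 * 1e12

per_mm2 = ADDRESSES_IPV6 / EARTH_SURFACE_MM2
print(f"IPv4 addresses: {ADDRESSES_IPV4:,}")          # 4,294,967,296
print(f"IPv6 addresses per mm^2: {per_mm2:.3g}")      # 6.67e+17, i.e. ~667 million billion

# Internet2 transfer: 860 GB in (less than) 20 minutes.
gbit_per_s = 860e9 * 8 / (20 * 60) / 1e9
print(f"Average rate: at least {gbit_per_s:.2f} Gbit/s")  # ... 5.73 Gbit/s
print(f"{860 / 180:.1f} GB per DVD")  # 4.8, close to a 4.7 GB single-layer DVD
```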

Labels: internet

Thursday, August 24, 2006

The digital beautification algorithm

Another application introduced at the SIGGRAPH conference in Boston!

After the image deblurrer and 3D model generation, here is an algorithm that can enhance the appearance of a human face in a photograph within a few minutes.

Researchers at Tel Aviv University in Israel developed the "digital beautification" algorithm. The process (careful!) does not substantially change a person's appearance; it makes subtle alterations to the photograph of a face so that the person appears more attractive.

The researchers, Tommer Leyvand and Yael Eisenthal, created a set of attractiveness rules for a software program, based on how people rated the faces in approximately 200 photographs. The software analyzed the images to measure distances, ratios and so on; a second program was then developed to apply the rules created by the first one.
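The two-step idea (learn from human ratings, then apply what was learned) can be sketched in a few lines of Python. The article does not say which learning method the researchers used, so this sketch assumes a simple k-nearest-neighbors approach: a face is described by normalized distances between landmarks (eyes, nose, mouth corners...), its rating is estimated from the most similar rated faces, and "beautifying" moves its features a small step toward a rating-weighted average of those neighbors. All function names and the `strength` parameter are hypothetical, and the final step of warping the photograph itself is not shown.

```python
import math


def face_features(landmarks):
    """Scale-invariant description: pairwise landmark distances divided by the largest one."""
    dists = [math.dist(a, b) for i, a in enumerate(landmarks) for b in landmarks[i + 1:]]
    largest = max(dists)
    return [d / largest for d in dists]


def nearest(features, rated_faces, k):
    """The k rated faces (feature vector, rating) closest to `features`."""
    return sorted(rated_faces, key=lambda item: math.dist(item[0], features))[:k]


def predicted_rating(features, rated_faces, k=3):
    """The learned 'rule': average human rating of the k most similar faces."""
    neighbors = nearest(features, rated_faces, k)
    return sum(rating for _, rating in neighbors) / len(neighbors)


def beautify(features, rated_faces, k=3, strength=0.3):
    """Nudge features toward a rating-weighted average of similar faces.

    Ratings must be positive; a small `strength` keeps the change subtle.
    """
    neighbors = nearest(features, rated_faces, k)
    total = sum(rating for _, rating in neighbors)
    target = [sum(f[i] * rating for f, rating in neighbors) / total
              for i in range(len(features))]
    return [x + strength * (t - x) for x, t in zip(features, target)]


# Tiny made-up training set: (feature vector, average human rating).
rated = [([0.1, 0.2], 5.0), ([0.9, 0.9], 2.0), ([0.15, 0.25], 6.0)]
print(predicted_rating([0.1, 0.2], rated, k=2))  # 5.5
```

With `strength=0` the face is left untouched; larger values pull it further toward its highly rated neighbors, which is where "subtle" turns into "substantial".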

The example is not Heidi Klum.

Source: NewScientistTech, two weeks ago.

Labels: exploits

Wednesday, August 23, 2006

Grid Computing to Predict Storm Surge

The SURA, the Southeastern Universities Research Association, recently gained more computing power for a computer grid that will be used for a number of research activities, including storm modeling.

First, what is a computing grid?

Wikipedia describes it as an emerging computing model that provides higher-throughput computing by taking advantage of many networked computers to model a virtual computer architecture able to distribute process execution across a parallel infrastructure. Well, everybody has their own definition. The common idea is the comparison with the distribution of electricity: the term grid computing originated in the early 1990s as a metaphor for making computing power as easy to access as an electric power grid. The same way you plug a device into a 110 or 220 V outlet, you plug your computer into the grid. Behind it sits a huge quantity of resources (CPUs and memory), usually networked computers that are geographically distributed and autonomous. And presto, you have access to phenomenal computing power and amazing data storage capacity.

That's what SURA is building: it has obtained new servers that are expected to double the number of CPUs in the grid's heterogeneous environment to about 1,800 and to raise its computing power to about 10 TFLOPS.
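How does a grid spread work over such a mixed pool of machines? Real grid middleware is far more elaborate, and the article does not describe SURA's; the Python sketch below only illustrates the basic idea with a greedy heuristic (an assumption): take the independent jobs from largest to smallest and give each one to the machine that would finish it earliest, knowing that faster machines finish the same job sooner.

```python
def schedule(job_costs, node_speeds):
    """Greedily assign independent jobs to heterogeneous grid nodes.

    job_costs:   amount of work per job (e.g. in GFLOP)
    node_speeds: processing rate of each node (e.g. in GFLOPS)
    Returns (node index chosen for each job, time at which the last node finishes).
    """
    busy_until = [0.0] * len(node_speeds)
    assignment = [None] * len(job_costs)
    # Biggest jobs first: placing them early usually balances the load better.
    for job in sorted(range(len(job_costs)), key=lambda j: job_costs[j], reverse=True):
        # Pick the node on which this job would finish earliest.
        node = min(range(len(node_speeds)),
                   key=lambda n: busy_until[n] + job_costs[job] / node_speeds[n])
        busy_until[node] += job_costs[job] / node_speeds[node]
        assignment[job] = node
    return assignment, max(busy_until)


# Two nodes, one twice as fast as the other, and three jobs.
print(schedule([30, 20, 10], [2.0, 1.0]))  # ([0, 1, 0], 20.0)
```

The second value is the time at which the last machine finishes; on a heterogeneous grid, placing the big jobs on the fast nodes first is what keeps that number low.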

A previous article explained that the most powerful computer right now, Blue Gene, reaches 360 teraFLOPS. Just to put things in perspective: 10 teraFLOPS is not bad.

SURA has spent the past two and a half years building the grid, and is working to develop forecasting models that will allow scientists to accurately predict a storm surge 3 days before it begins to approach. Currently, scientists are accurate in their forecasts about 24 hours before a storm.

Source: Computerworld, two weeks ago.

For those who do not know:

- A central processing unit (CPU), or sometimes simply processor, is the component in a digital computer that interprets instructions and processes data contained in computer programs.

- For FLOPS, see this article and try to keep up! ;)

Labels: discoveries

Monday, August 21, 2006

The rolling bot

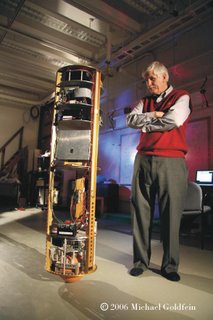

Ralph Hollis is a mobile robotics researcher.

Ralph Hollis is convinced that dynamically stable robots have more potential for integration into human environments than statically stable robots, such as robots that stand on legs.

And this is cool: besides making us wonder where he got the idea, it lets robotics fans discover a robot that moves on a single ball.

Ballbot (obviously, that's its name) is about the height and width of a person, weighs about 95 pounds, and balances and moves on a single urethane-coated metal sphere.

Main advantages: it can maneuver in small spaces because of its tall, thin shape, and because it does not have to turn to face the direction it intends to move before moving. And contrary to what one might think, it is more stable on a slope than traditional robots.

Internal sensors provide balancing data to an onboard computer, which uses this information to drive the rollers that move the ball it stands on.

Adding a head and arms could help it further with rotation and balance, but for Ralph Hollis there are still many hurdles to overcome: responding to unplanned contact with its surroundings, planning motion in cluttered spaces, and safety issues...

When Ballbot is not in operation, it stands on three legs, which actually make it a very pretty rack.
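This is the classic inverted-pendulum problem: when the body starts to lean, the ball must be rolled under it to catch the fall. The Python sketch below simulates that loop in one plane with a simple proportional-derivative (PD) controller on a linearized model. The model, the gains and the 0.8 m pendulum length are assumptions chosen for illustration; the article does not describe Ballbot's actual controller, which is certainly more elaborate. The sensors are assumed to measure tilt and tilt rate perfectly.

```python
G = 9.81  # gravitational acceleration, m/s^2


def simulate_balance(theta0, kp, kd, length=0.8, dt=0.001, duration=3.0):
    """Return the tilt angle (rad) after `duration` seconds of PD-controlled balancing.

    Planar, linearized model of a body balancing on a ball:
        theta'' = (G / length) * theta - accel / length
    where accel is the ball's acceleration, commanded through the rollers.
    """
    theta, omega = theta0, 0.0
    for _ in range(int(round(duration / dt))):
        accel = kp * theta + kd * omega          # roll the ball toward the lean
        alpha = (G / length) * theta - accel / length
        omega += alpha * dt                      # semi-implicit Euler integration
        theta += omega * dt
    return theta


print(abs(simulate_balance(0.1, kp=30.0, kd=8.0)) < 1e-3)  # True: the lean dies out
print(abs(simulate_balance(0.1, kp=0.0, kd=0.0)) > 1.0)    # True: uncontrolled, it falls
```

In this model, stability requires `kp` to exceed the gravity term `G` and `kd` to be positive; with both gains at zero the lean grows exponentially, which is why the rollers must react continuously.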

Source: The Robotics Institute, last Friday.

Another picture here and another here.

Labels: exploits

Tuesday, August 15, 2006

A computer 10 million times faster

The Department of Energy's Oak Ridge National Laboratory and Cray announced a $200 million deal in June to complete the world’s most powerful computer in 2008.

This supercomputer, which Cray already calls Baker, will use dual-core AMD Opteron processors to reach a peak speed of 1 petaFLOPS.

FLOPS (FLoating-point Operations Per Second) is a measure of computer speed: 1 FLOPS is one floating-point operation per second.

1 petaFLOPS is a million billion (10^15) FLOPS.

For comparison, the computer on which you are reading this article can execute about 100 million FLOPS, i.e. 10 million times fewer than Baker!

Baker will be about 3 times faster than Blue Gene, the most powerful machine according to the TOP500, the official list of the fastest supercomputers.
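The ratios are simple powers of ten, but it is easy to slip by a factor of 1,000, so here they are in Python. The 100 megaFLOPS desktop and 360 teraFLOPS Blue Gene figures are the ones quoted on this blog; the prefixes are the standard decimal ones.

```python
PREFIX = {"mega": 1e6, "giga": 1e9, "tera": 1e12, "peta": 1e15}

baker = 1 * PREFIX["peta"]          # Baker's announced peak speed
desktop = 100 * PREFIX["mega"]      # the figure used above for a home computer
blue_gene = 360 * PREFIX["tera"]    # Blue Gene, as quoted on this blog

print(f"Baker / desktop:   {baker / desktop:,.0f}")   # 10,000,000
print(f"Baker / Blue Gene: {baker / blue_gene:.1f}")  # 2.8
```

So "3 times faster" is a rounding of about 2.8.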

Update, Wednesday 23/08/2006: one can read on this page that Japanese researchers have already broken the petaFLOPS barrier, but not officially, because their machine cannot run the software required by the official rankings. Nevertheless, this computer, built for biotechnology, cost $9 million, and pharmaceutical companies are already asking to use it to test the thousands of chemical compounds that could become the next miracle drug, as well as the ways each one will interact with the trillions of proteins in the human body.

Source: FCW.com, last week.

For those who do not know:

- Cray Inc. is a supercomputer manufacturer based in Seattle, Washington. The company's predecessor, Cray Research, Inc. (CRI), was founded in 1972 by computer designer Seymour Cray.

- Supercomputers introduced in the 1960s were designed primarily by Seymour Cray at Control Data Corporation (CDC), and led the market into the 1970s until Cray left to form his own company, Cray Research. He then took over the supercomputer market with his new designs, holding the top spot in supercomputing for 5 years (1985–1990).

- A multi-core microprocessor is one which combines two or more independent processors into a single package, often a single integrated circuit (IC). A dual-core device contains only two independent microprocessors.

Labels: computers

Combine pictures to make a 3D model

Working with several researchers from the University of Washington, Microsoft's Richard Szeliski has developed a technology that converts digital images into a 3D model, allowing the user to move, walk or fly through the scene.

It's called Photosynth, and it was also introduced at the SIGGRAPH conference in Boston. The method consists of extracting distinctive features from different photographs and cross-referencing them against other images, looking for matches.

This lets it pin down the 3D position of each feature and then calculate where the camera was when each picture was taken.
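One building block of that geometry problem is triangulation: if two cameras at known positions both see the same feature, the feature lies where their viewing rays meet. The Python sketch below assumes the camera positions and ray directions are already known and returns the midpoint of the two rays' closest approach; Photosynth, as Szeliski describes it, adjusts camera positions and points simultaneously, which this sketch does not attempt. The function name and example coordinates are illustrative.

```python
def triangulate(c1, d1, c2, d2):
    """Estimate a 3-D point seen from two cameras.

    c1, c2: camera centers; d1, d2: directions of the rays toward the same feature.
    Returns the midpoint of the shortest segment joining the two rays.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel: the cameras give no depth information")
    s = (b * e - c * d) / denom      # position along ray 1
    t = (a * e - b * d) / denom      # position along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two cameras 4 m apart, both looking at a feature located at (1, 2, 5).
print(triangulate((0, 0, 0), (1, 2, 5), (4, 0, 0), (-3, 2, 5)))  # [1.0, 2.0, 5.0]
```

With real, noisy measurements the two rays never meet exactly, which is why the midpoint of their closest approach is used, and why refining everything together across dozens of photos gives better results.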

"Basically, it's just a geometry problem. You are simultaneously adjusting the position of the camera and where those little pieces of images are, until everything snaps together"

The system can work with only 2 pictures, but it's much more interesting when several dozen pictures are combined. Szeliski believes that cities, tourism boards and even photo-sharing sites will soon use this technology. Flickr, Picasa, Talk a Pic?

A video is available here, but it's disappointing. That's becoming a habit for Microsoft...

Let's take the opportunity to mention a great site. Imagine you want to photograph a building in the center of Beijing: impossible without at least a tourist or two in the shot. Shoot anyway, several times, without moving. Then give the pictures to Tourist Remover which, as its name says, can generate a picture with nobody in it.
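The site does not explain how Tourist Remover works. A common technique for this kind of job (an assumption here, not a description of that service) is a per-pixel median: take several shots from exactly the same spot and, at each pixel, keep the median value, so that anyone who appears at a given spot in fewer than half of the shots is voted out. A minimal Python sketch on tiny grayscale images:

```python
from statistics import median


def remove_passers_by(frames):
    """Per-pixel median over aligned shots of the same scene (grayscale, lists of rows)."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median(frame[y][x] for frame in frames) for x in range(width)]
            for y in range(height)]


background = [[10, 10, 10], [10, 10, 10]]
shots = [[row[:] for row in background] for _ in range(3)]
shots[0][0][0] = 200   # a tourist in each shot, each time somewhere else
shots[1][1][2] = 200
shots[2][0][1] = 200
print(remove_passers_by(shots) == background)  # True
```

Real photos would first need to be aligned, and a person standing still in every shot would of course survive the vote.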

Source: BBC News, two weeks ago.

Labels: discoveries

A spy in the keyboard

Peripheral devices such as keyboards, mice and microphones could pose a serious security vulnerability.

At least, that's what researchers at the University of Pennsylvania believe. Using a device known as a JitterBug, they found that an attacker could physically bug a peripheral device and steal chunks of data by introducing an all-but-imperceptible processing delay after each keystroke.

As a proof of concept, they built a working keyboard JitterBug, a real spy gadget. Of course, the attacker needs physical access to the target keyboard at some point, just to install the device. But that is quite easy: at worst, you swap the real keyboard for a similar but modified model.

Unlike existing keystroke loggers, a JitterBug does not need to be retrieved to collect the data: it can rely on any network application, such as e-mail or instant messaging, to transmit it. JitterBugs are smaller, and so have less storage space, but they are smarter: they can be configured to record only one type of data. For example, a JitterBug could activate only when the user types his name: one can expect the keystrokes that follow to include the user's password!
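How can a keyboard send data over the network without any network access of its own? The idea is a timing covert channel: the device slightly delays keystrokes so that the gaps between them encode bits, and those gaps remain visible in the timing of packets sent by an interactive network application. The Python sketch below is a simplified illustration of that principle, not the researchers' actual design; the 20 ms window and the encoding rule (gap a multiple of the window for 0, a multiple plus half a window for 1) are assumptions.

```python
WINDOW_MS = 20  # hypothetical timing window (ms); the real device's parameters may differ


def encode(press_times_ms, bits):
    """Release keystrokes slightly late so that each gap between releases carries one bit.

    Bit 0: gap is a multiple of WINDOW_MS. Bit 1: a multiple plus WINDOW_MS / 2.
    A key is never released before it is pressed; each release adds < WINDOW_MS of delay.
    """
    sent = [press_times_ms[0]]
    for i, pressed in enumerate(press_times_ms[1:]):
        earliest = max(pressed, sent[-1])
        if i < len(bits):
            target = 0 if bits[i] == 0 else WINDOW_MS / 2
            delay = (target - (earliest - sent[-1])) % WINDOW_MS
        else:
            delay = 0  # no bits left to leak: pass the keystroke through unchanged
        sent.append(earliest + delay)
    return sent


def decode(observed_ms, n_bits):
    """Recover bits from keystroke timing seen remotely (e.g. packet timestamps)."""
    bits = []
    for prev, cur in zip(observed_ms, observed_ms[1:n_bits + 1]):
        phase = (cur - prev) % WINDOW_MS
        # Near 0 (or WINDOW_MS) means 0, near WINDOW_MS / 2 means 1.
        bits.append(1 if WINDOW_MS / 4 <= phase < 3 * WINDOW_MS / 4 else 0)
    return bits


presses = [0, 130, 260, 410, 555, 700]   # when the user actually hit the keys (ms)
secret = [1, 0, 1, 1, 0]                 # e.g. the first bits of a captured password
released = encode(presses, secret)
print(decode(released, len(secret)))     # [1, 0, 1, 1, 0]
```

In this sketch each keystroke is held back by less than 20 ms, too little for a typist to notice, and decoding still works as long as network jitter shifts each gap by less than a quarter of the window.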

Although there is no evidence that anyone has actually used JitterBugs, there is no reason to believe nobody ever has. An alarming scenario: a manufacturer of peripheral devices could be compromised and flood the market with JitterBugged devices.

The researchers' suggested solution: cryptography...

Source: University of Pennsylvania, last Monday.

Labels: discoveries

Friday, August 11, 2006

Shake, Shake, Shake ... Shake your picture

Researchers at the University of Toronto and MIT have developed a new image-processing technique that could prevent the blurriness resulting from photographs taken with a shaky hand.

Their method is based on an algorithm that computes the path taken by the shaking camera when the picture was shot, and then traces that path back to cancel the blur... and improve the picture, as you can see in the picture here. Click on the picture to see more examples.

"It's the first time that natural image statistics have been used successfully in deblurring images," says Rob Fergus from MIT, the project leader, who demonstrated the technology at the SIGGRAPH conference last week. Processing each picture took 10 to 15 minutes. The technique relies on a universal statistical property of natural images that characterizes transitions from light to dark.

Blurry images have contrasting gradients, which Fergus' technique uses to estimate how the camera moved. The process then generates what is called a "blur kernel", which describes the path the camera followed while the picture was being taken.
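Once the blur kernel is known, removing the blur is a classic deconvolution problem. The Python sketch below shows it in one dimension: it blurs a signal with a small, lopsided kernel standing in for camera shake, then applies Richardson-Lucy deconvolution, a standard iterative method, with that kernel assumed known. Estimating the kernel from the image statistics, the novel and difficult part of Fergus' work, is not shown; the wrap-around boundary handling, the test signal and the 50 iterations are simplifications chosen for the example.

```python
def blur(signal, kernel):
    """Circular 1-D convolution: each sample becomes a weighted mix of itself and its predecessors."""
    n = len(signal)
    return [sum(k * signal[(i - j) % n] for j, k in enumerate(kernel)) for i in range(n)]


def correlate(signal, kernel):
    """Circular correlation with the same kernel (the mirror image of `blur`)."""
    n = len(signal)
    return [sum(k * signal[(i + j) % n] for j, k in enumerate(kernel)) for i in range(n)]


def richardson_lucy(blurred, kernel, iterations=50):
    """Richardson-Lucy deconvolution with a known kernel summing to 1 (positive data)."""
    estimate = list(blurred)                      # start from the blurry signal itself
    for _ in range(iterations):
        reblurred = blur(estimate, kernel)        # what the estimate would look like blurred
        ratio = [b / r for b, r in zip(blurred, reblurred)]
        correction = correlate(ratio, kernel)     # spread the mismatch back to its sources
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


sharp = [1.0] * 6 + [9.0] * 3 + [1.0] * 4 + [5.0] + [1.0] * 6
shake = [0.5, 0.3, 0.2]          # hypothetical camera-shake kernel, sums to 1
blurred = blur(sharp, shake)
restored = richardson_lucy(blurred, shake)
print([round(x, 1) for x in restored])
```

Each iteration re-blurs the current estimate, compares it with the observed signal, and corrects the estimate where they disagree; the total brightness is preserved at every step.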

There are several available products that aim to cancel the effects of photos taken with unsteady hands, but they only eliminate blurriness to a limited degree, while this work addresses more complex patterns of motion.

Source: CNet news.com, yesterday.

For those who do not know:

- The Massachusetts Institute of Technology, or MIT, is a private research university located in the city of Cambridge, Massachusetts, USA. Its mission and culture are guided by an emphasis on teaching and research grounded in practical applications of science and technology.

- SIGGRAPH (short for Special Interest Group on Graphics and Interactive Techniques) is the name of the annual conference on computer graphics (CG) convened by the ACM SIGGRAPH organization. The first SIGGRAPH conference was in 1974. The conference is attended by tens of thousands of computer professionals, and has most recently been held in Boston. Past SIGGRAPH conferences have been held in Dallas, Seattle, Los Angeles, New Orleans, San Diego and elsewhere across the United States.

Labels: discoveries

Wednesday, August 09, 2006

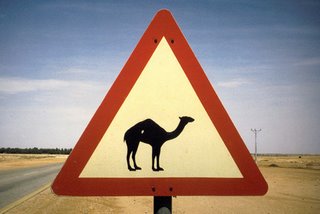

Siemens VDO, it's a sign...

Siemens VDO has developed a system that can recognize speed limiting road signs.

Very well. Now, one has to find a useful application. The speed limit could be displayed on the dashboard. Hmm.

The speed limit could also be sent to the adaptive cruise control (ACC) system, which automatically reduces or increases the vehicle's speed. That's better.

The system works with a video camera linked to an embedded computer running TSR (Traffic Sign Recognition) software.
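What might the link between the recognizer and the cruise control look like? The article gives no details, so the Python sketch below is purely hypothetical: it only accepts a new limit after a few matching detections (to ignore a single misread sign) and never lets the cruise control exceed either the recognized limit or the driver's own setting. The class, the function names and the three-detection threshold are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeedLimitTracker:
    """Hypothetical glue between a traffic-sign recognizer and the cruise control."""
    confirm_after: int = 3               # matching detections required before trusting a sign
    current_limit: Optional[int] = None  # km/h; None until a sign has been confirmed
    _candidate: Optional[int] = None
    _matches: int = 0

    def observe(self, detected: Optional[int]) -> Optional[int]:
        """Feed one camera frame's result (a limit in km/h, or None if no sign was seen)."""
        if detected is not None:
            if detected == self._candidate:
                self._matches += 1
            else:
                self._candidate, self._matches = detected, 1
            if self._matches >= self.confirm_after:
                self.current_limit = detected
        return self.current_limit


def acc_target_speed(driver_setting: int, limit: Optional[int]) -> int:
    """Cruise speed: the driver's setting, capped by the confirmed limit if there is one."""
    return driver_setting if limit is None else min(driver_setting, limit)


tracker = SpeedLimitTracker()
for frame in [80, 50, None, 50, 50]:
    limit = tracker.observe(frame)
print(acc_target_speed(90, limit))  # 50
```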

This invention belongs in the "extraordinary stuff with a low interest/cost ratio" category. One can bet that drivers will switch it off most of the time. But if it helps improve road safety, count us in...

Road safety, or more exactly driver assistance, is a market where Siemens VDO holds a big share. The system is set to enter volume production in 2008.

Source: Automotive Design Line, yesterday.

Labels: navigation

Powered by Stuff-a-Blog